Monkeys with typewriters can write Shakespeare, but can machines create anime as well as humans?

The automation of a great deal of manual labour and factory work over the past few decades has, depending on how you look at it, either deprived honest, hard-working humans of their livelihood or freed millions from a life of toil to focus on more important things. In changes to the working environment unprecedented since the Industrial Revolution, machines have taken on more and more jobs once done by people. Until very recently, though, computers and industrial machinery were restricted to repetitive actions within the parameters of their programming. It’s hard to feel worried when the most advanced robots in the world struggle with something as simple as walking, stumbling all over the place like drunken toddlers. Besides, we have something that sets us apart from animals and machines: the divine spark of creativity. Computers can’t write a poem or draw a cute moe anime character, right?

Actually, that last one might be about to change.

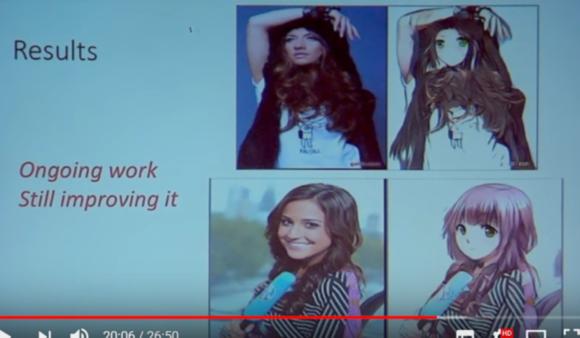

Using deep learning, a machine learning technique based on multi-layered neural networks, an undergraduate from China’s Fudan University has been attempting to create a programme that can give photographs of real people an anime-style makeover. The theory is that each iteration of the programme, with its successes and failures, informs the next, so its ability to create anime versions of people should only ever get better. Yanghua Jin, the student behind the project (whose anime art-creating AI we also looked at last year), discussed his work at a Deep Learning workshop held in Tokyo in March this year, and his presentation can be seen in the video below.

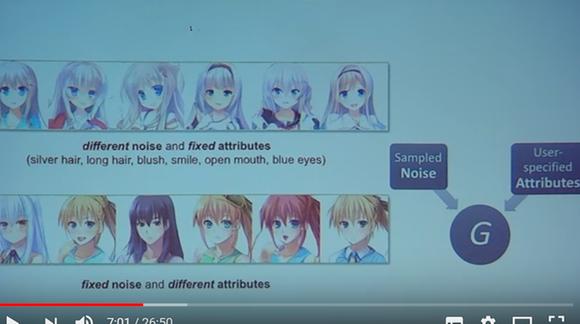

Jin introduces the concept of Generative Adversarial Networks (GANs) and their role in improving the project’s adaptation of photographs. Simply put, a GAN pits two networks against each other. The first, known as the generator, produces anime images from photos using attributes learned from anime images, as in the image from the presentation below. The generator studies a number of attributes, such as hair or eye colour, whether the hair is long or short, and whether the mouth is open or closed. It also works with ‘noise’, variation not covered by those labels, such as the size of the eyes relative to the rest of the face, or the angle at which the figure is posing. It then produces anime versions of photos in an attempt to ‘fool’ the other network, the discriminator.

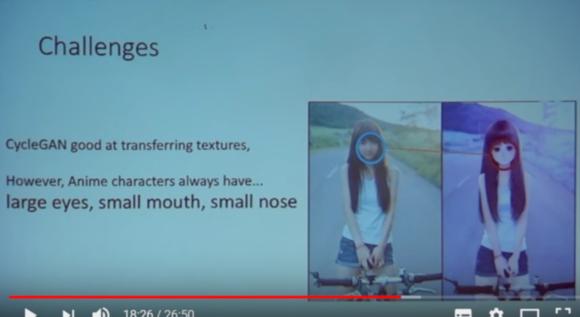

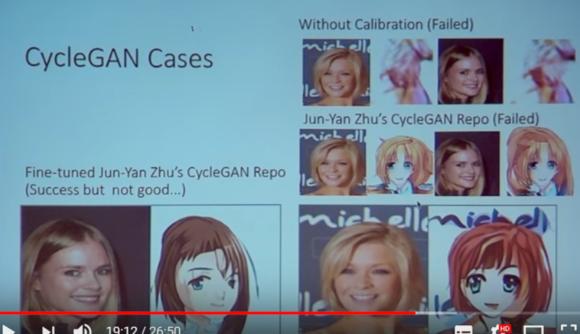
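
The attribute-plus-noise setup described above resembles a conditional GAN, where the generator is fed a vector of labelled attributes together with random noise. The sketch below is a hypothetical illustration, not Jin’s code: the tag names, the noise dimension of 8 and the function names `encode_tags` and `build_generator_input` are all assumptions. It only shows how such an input vector could be assembled; the generator network itself is left out.

```python
import random

# Hypothetical attribute tags (assumption); the real project's tag set may differ.
TAGS = ["blonde_hair", "blue_hair", "long_hair", "short_hair",
        "blue_eyes", "red_eyes", "open_mouth"]

def encode_tags(selected):
    """Return a 0/1 vector marking which of the known TAGS are present."""
    unknown = set(selected) - set(TAGS)
    if unknown:
        raise ValueError(f"unknown tags: {sorted(unknown)}")
    return [1.0 if tag in selected else 0.0 for tag in TAGS]

def build_generator_input(selected, noise_dim=8, rng=None):
    """Concatenate the attribute vector with Gaussian noise.

    The attributes control labelled features such as hair colour; the noise
    stands in for unlabelled variation such as pose or facial proportions.
    """
    rng = rng or random.Random()
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return encode_tags(selected) + noise

if __name__ == "__main__":
    vec = build_generator_input(["blue_hair", "long_hair", "open_mouth"],
                                rng=random.Random(0))
    print(len(vec))  # 15
    print(vec[:7])   # [0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 1.0]
```

Keeping the same tags but drawing fresh noise would, in a trained model, give characters with the same hair and eyes in different poses, which is why the two parts are kept separate.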

The discriminator then compares the images the generator has produced against its library of real images to determine whether each one is synthetic or genuine. The two networks learn from their mistakes: the generator gradually becomes better at producing convincing images, while the discriminator becomes better at spotting fakes. Jin explains that CycleGAN, a variant of GAN, has often been used successfully to apply different textures to images or footage, and is used by companies that want to visualise things like interior decorating changes. He gives the example of video footage of a horse to which zebra stripes have been applied by the GAN process, and also explains how the irregular, unrealistic proportions of moe anime features present problems when working with GANs.
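
The tug-of-war between the two networks is usually expressed as a pair of loss functions that each network tries to minimise. The sketch below shows the standard binary cross-entropy formulation with the widely used ‘non-saturating’ generator loss; it is a generic illustration, not necessarily what Jin’s project uses. The inputs `d_real` and `d_fake` are assumed to be the discriminator’s estimated probabilities that a real image and a generated image, respectively, are genuine.

```python
import math

EPS = 1e-12  # keeps log() away from zero

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    Low when real images score near 1 and generated images score near 0.
    """
    return -(math.log(max(d_real, EPS)) + math.log(max(1.0 - d_fake, EPS)))

def generator_loss(d_fake):
    """Non-saturating generator loss.

    Low when the discriminator scores the generated image near 1,
    i.e. when the generator manages to 'fool' it.
    """
    return -math.log(max(d_fake, EPS))

if __name__ == "__main__":
    # Early in training: the discriminator easily spots the fake.
    print(round(discriminator_loss(0.9, 0.1), 3))  # 0.211
    print(round(generator_loss(0.1), 3))           # 2.303
    # Later: the fake is harder to tell apart from the real thing.
    print(round(discriminator_loss(0.6, 0.5), 3))  # 1.204
    print(round(generator_loss(0.5), 3))           # 0.693
```

As the generated images become more convincing, the generator’s loss falls and the discriminator’s rises, which is the ‘learning from mistakes’ dynamic in numerical form.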

The early versions, which produced uncalibrated images ranging from abstract swirls of colour to characters with deformed features, wouldn’t have worried many professional artists.

But within a relatively short space of time, later generations of the GAN were producing much more realistic images, to the point where the naked eye might struggle to tell whether an image had been drawn by hand or by computer.

Since the images should keep improving, it seems likely that this kind of technology could be applied to a number of creative industries. While this project is the work of an undergraduate student and his anime-loving friends, the potential is obvious. Not all Japanese anime fans, though, seemed all that upset that computers might be taking over their beloved art form.

‘The progression is so quick! At first they looked like monsters, but now…’

‘If everything becomes computer-made that would be boring, but with this kind of genre it’s all right.’

‘So computers can make images. How long until they’re writing and drawing manga?’

‘Whoa, those computer-generated moe girls are really cute.’

‘How long before the computer gets a taste for it and starts producing hentai?’

Since talented artists are still needed to create the data sets the programme learns from, or to supplement them with drawings of anime boys (which, Jin explains, are much harder to find online), there may still be some jobs going in the anime industry in the future. For the rest of us, our job will be to feed and maintain our robot overlords, but at least we’ll be kept entertained with anime while we do it. Those hoping to get Japanese visas for anime work might also want to get a move on.

Source: YouTube/AIP RIKEN via Jin

Top image: Pixabay

Insert images: YouTube/AIP RIKEN
