Monkeys with typewriters can write Shakespeare, but can machines create anime as well as humans?

The automation of a great deal of manual labour and factory work over the past few decades has, depending on how you look at it, either deprived honest, hard-working humans of their livelihood or freed millions from a life of toil to focus on more important things. In changes to the working environment unprecedented since the Industrial Revolution, machines have taken on more and more jobs once done by people. Until very recently, though, computers and pieces of industrial machinery have been restricted to repetitive actions within the parameters of their programming. It’s hard to feel worried when the most advanced robots in the world struggle to manage something as simple as walking, stumbling all over the place like drunken toddlers. Besides, we have something that differentiates us from animals and machines: the divine spark of creativity. Computers can’t write a poem or draw a cute moe anime character, right?

Actually, that last one might be about to change.

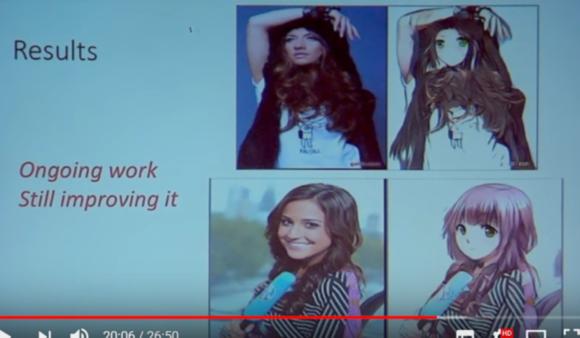

Using deep learning, a machine learning approach in which neural networks learn patterns from large sets of examples, an undergraduate from China’s Fudan University has been attempting to create a programme that can give photographs of real people an anime-style makeover. The theory is that each iteration of the programme, with its successes and failures, informs the next, so that its ability to create anime versions of people should keep getting better. Yanghua Jin, the student behind the project (whose anime art-creating AI we also looked at last year), discussed his work at a deep learning workshop held in Tokyo in March this year, and his presentation can be seen in the video below.

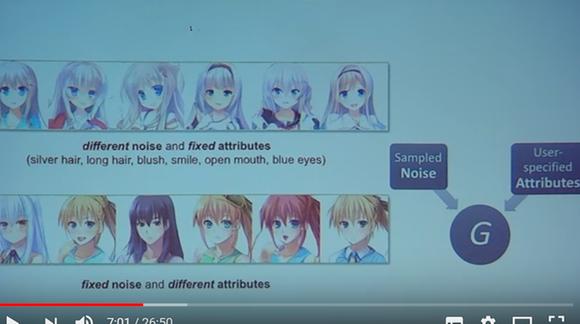

Jin introduces the concept of Generative Adversarial Networks (GANs) and their role in improving the project’s adaptation of photographs. Simply put, a GAN uses two networks. One of them, known as the generator, is focused on producing anime images from photos by using attributes taken from anime images, as in the image from the presentation below. The generator works with a number of image attributes, such as hair or eye colour, whether the hair is long or short, and whether the mouth is open or closed. It also takes in ‘noise’, which accounts for things like the proportion of eye size to the rest of the face, or the angle at which the figure is posing. With these, it produces anime versions of photos in an attempt to ‘fool’ the other network, the discriminator.
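To make the attributes-plus-noise idea a little more concrete, here is a minimal sketch of how a generator’s input might be assembled. It is not Jin’s code: the attribute names, the `build_generator_input` helper and the noise size are all assumptions made up for illustration. The point is simply that the labelled attributes pin down what the character should look like, while the random noise leaves room for everything the labels don’t cover.

```python
import random

# Hypothetical attribute vocabulary, loosely based on the examples in the talk.
ATTRIBUTES = ["blonde_hair", "blue_eyes", "long_hair", "open_mouth"]


def build_generator_input(active, noise_dim=8, seed=None):
    """Return one flat input vector: attribute flags followed by random noise.

    active    -- set of attribute names that should be switched on
    noise_dim -- how many random values to append (an assumed size)
    seed      -- optional seed so the same noise can be reproduced
    """
    unknown = set(active) - set(ATTRIBUTES)
    if unknown:
        raise ValueError(f"unknown attributes: {sorted(unknown)}")

    rng = random.Random(seed)
    # 1.0 where the attribute is requested, 0.0 where it is not.
    attribute_part = [1.0 if name in active else 0.0 for name in ATTRIBUTES]
    # Gaussian noise stands in for unlabelled variation such as pose.
    noise_part = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return attribute_part + noise_part


vector = build_generator_input({"blue_eyes", "long_hair"}, seed=0)
print(len(vector))
```

Asking for the same attributes with a different seed keeps the first four values and changes only the noise, which in a real generator would correspond to a different drawing of a character with the same hair and eyes.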

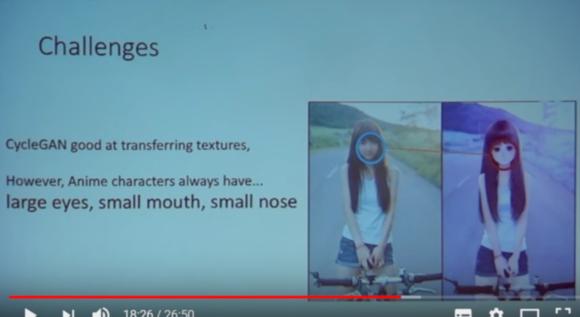

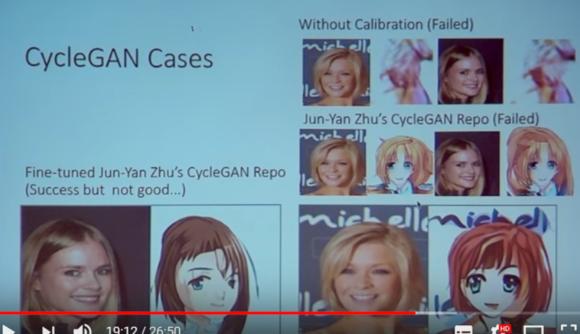

The discriminator then compares the images the generator has produced against its library of images to determine whether each one is synthetic or genuine. The two networks learn from their mistakes: the generator gradually becomes better at producing images, while the discriminator becomes better at telling generated images from genuine ones. Jin explains that CycleGAN, a form of GAN software, has often been used successfully to apply different textures onto images or footage, and is used by companies that want to visualise things like interior decorating changes. He gives the example of video footage of a horse to which zebra stripes have been applied by the GAN process, and also explains how the irregular, unrealistic proportions of moe anime features present problems when working with GANs.
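The tug-of-war between the two networks comes down to two scores pulling in opposite directions. The sketch below uses the standard binary cross-entropy form of the GAN objective, which is a common textbook choice and not necessarily what Jin’s project uses; the function names are ours. Here a discriminator score is a number between 0 and 1, where 1 means ‘I think this is a genuine drawing’.

```python
import math


def bce(prediction, target, eps=1e-7):
    """Binary cross-entropy for a single score, clamped to avoid log(0)."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(real_scores, fake_scores):
    """Low when genuine images score near 1 and generated ones score near 0."""
    real_term = sum(bce(s, 1.0) for s in real_scores) / len(real_scores)
    fake_term = sum(bce(s, 0.0) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Low when the discriminator mistakes generated images for genuine ones."""
    return sum(bce(s, 1.0) for s in fake_scores) / len(fake_scores)


# A sharp-eyed discriminator: genuine images score high, fakes score low.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]), generator_loss([0.1, 0.2]))
# A fooled discriminator: the fakes now score high as well.
print(discriminator_loss([0.9, 0.8], [0.9, 0.8]), generator_loss([0.9, 0.8]))
```

In training, each network is nudged to lower its own loss in turn, so any improvement by one side makes life harder for the other. That is the sense in which both ‘learn from their mistakes’.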

The early versions, and uncalibrated images, which varied from abstract swirls of colour to characters with deformed features, wouldn’t have had many professional artists all that worried.

But within a relatively short space of time, later generations of the GAN were producing much more convincing images, such that the naked eye might struggle to tell whether an image had been drawn by hand or by computer.

If the images keep improving as expected, it seems likely that this kind of technology could be applied to a number of the creative industries. While this project is the work of an undergraduate student and his anime-loving friends, the potential is obvious. Not all Japanese anime lovers, though, seemed all that upset that computers might be taking over their beloved art form.

‘The progression is so quick, at first they looked like monsters, but now…’

‘If everything becomes computer-made that would be boring, but with this kind of genre it’s all right.’

‘So computers can make images. How long until they’re writing and drawing manga?’

‘Whoa, those computer-generated moe girls are really cute.’

‘How long before the computer gets a taste for it and starts producing hentai?’

With talented artists needed to create the data sets the programme bases its work on, or to supplement them with drawings of anime boys, which Yanghua Jin explains are much harder to come across online, there might still be some jobs going in the anime industry in the future. For the rest of us, our job will be to feed and maintain our robot overlords, but at least we’ll be kept entertained with anime while we do it. Those hoping to get Japanese visas for anime work might also want to get a move on.

Source: YouTube/AIP RIKEN via Jin

Top image: Pixabay

Insert images: YouTube/AIP RIKEN
