This AI network can turn any landscape into a sun-bleached, breezy anime backdrop while retaining key details.

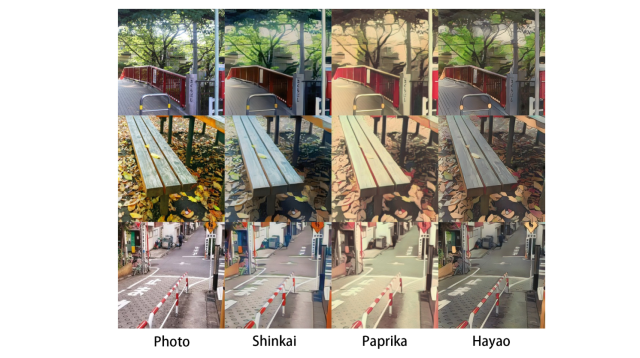

Opinions may vary, but personally I’ve always wished real life was just a touch more like it was in animated movies. Imagine tucking into a steaming slice of pie as rendered by Hayao Miyazaki in Kiki’s Delivery Service, or walking through the searing sunlight of Makoto Shinkai’s picturesque offerings. While the late Satoshi Kon’s content is less peaceful, there’s still something hypnotic about his swooping scenery and dream-like aesthetics. This is presumably why films from all three creatives were specially selected to train an AI program to convert photographs into anime-style art.

Jie Chen, Gang Liu and Xin Chen, students at Wuhan University and Hubei University of Technology, worked together to produce AnimeGAN, a new generative adversarial network (or GAN) designed to fix the problems with existing methods of converting photographs into art-like images. As they stated in their original thesis, manually creating anime can be “laborious”, “difficult” and “time-consuming”, so having the option of converting photography would reduce the workload and maybe even inspire more people to try their hand at producing anime.

▼ A photograph…

▼ And here’s how it looks after being put through AnimeGAN’s “Hayao” filter.

August 6 saw the release of AnimeGAN 2, which has been improved in various ways. The library of images the network draws upon to create a new interpretation has been updated with a host of new Blu-ray quality images, and high-frequency artifacts have been reduced. Furthermore, it has reportedly been made much easier to recreate the effects shown by the team in their original thesis.

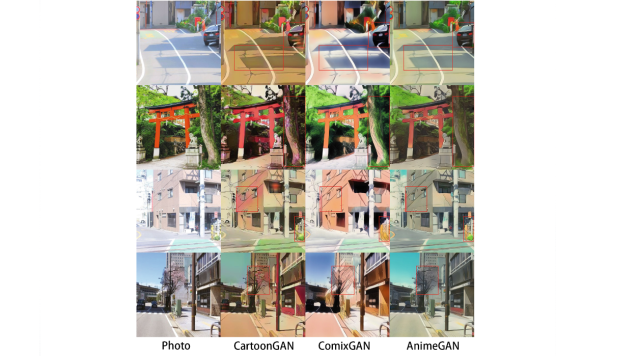

Where the network really shines is in its ability to retain the features of its source images. Compare AnimeGAN with existing state-of-the-art networks and it’s apparent that those networks smooth and blur some aspects of the photographs (trees, for instance, or windows) so much that they become unrecognizable. AnimeGAN not only retains these finer details but takes less time to do so, as long as it’s been adequately trained!

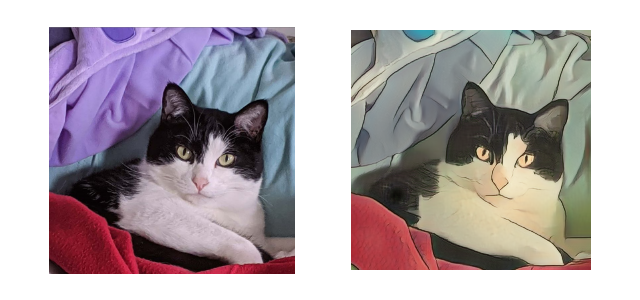

The best part is that you can test a version of the first AnimeGAN right now, with no need to download any additional software. This version doesn’t let you choose between the three filters, but it still makes for a pretty cute photograph in its own right.

▼ As seen here, on my beautiful and photogenic cat.

I must admit, I can’t help but wonder about the naming of the filters. Obviously, each one stands for the image bank it was trained on: “Hayao” is sourced from Hayao Miyazaki’s The Wind Rises, while “Paprika” is from Satoshi Kon’s film of the same name; stills from Makoto Shinkai’s Your Name make up the “Shinkai” version. While alternating between a director’s given name, a director’s surname and a film title is artsy, it has the unintended effect of muddying both the connection between the three filters and the credit due to the films’ directors.

Still, since the network is apparently easy to train, there’s nothing stopping people in the future from making their own Kon, Totoro, or Makoto filters; heck, maybe you could even render reality in the pastel pigments of Sailor Moon if you fed it enough images? The sky, and indeed your own photographic skill, is the limit!

Source: GitHub/TachibanaYoshino/AnimeGAN (1, 2, 3)

Top image: GitHub/TachibanaYoshino/AnimeGAN

Insert images: GitHub/TachibanaYoshino/AnimeGAN (1, 2)

Cat images ©SoraNews24
